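A Simplified Screen Space Reflection Implementation

The core idea behind implementing screen space reflection (SSR)

GitHub source code: SimpleScreenSpaceReflection

Bilibili tutorial: 小祥带你实现屏幕空间反射SSR (Xiaoxiang walks you through implementing screen space reflection)

Overall approach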

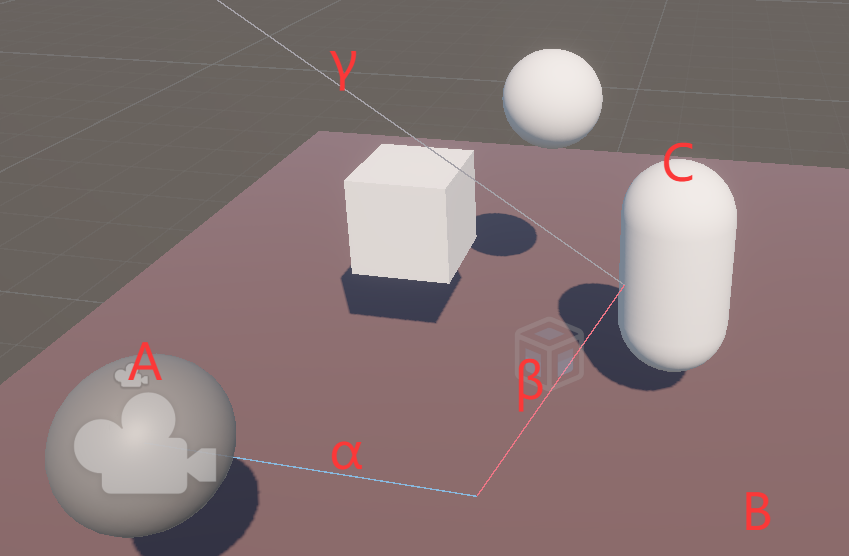

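The most common way to implement reflections in games today is to use reflection probes, which sample environment maps in every direction so that objects can compute and draw the reflected image. But what about dynamic objects? Updating the environment maps continuously is clearly very expensive, so is there a better way?

Let's first look at how a reflection forms in the real world. Taking the diagram as an example, a reflection in "Earth Online" forms like this: light ray γ strikes object C and reflects off its surface as ray β; β travels on until it meets ground B, where it reflects again as ray α, which enters our eye (the camera) and forms the image of object C on ground B. In a game engine, drawing the reflection of object C on the ground really means finding, for each reflecting pixel on ground B, the color of the corresponding point on object C. So we start at the camera, cast a ray along the view direction, and walk the reflection path backwards: the ray bounces off ground B and hits object C, which tells us which pixel of object C we need. Where does that color come from? From the full-screen texture the render pipeline has already produced. We sample that texture at the point where the ray hit object C and use the color to draw the reflection across the screen. That is the basic idea behind screen space reflection (SSR).

This approach has an obvious problem. We sample the reflected color from the finished full-screen texture, and a single 2D image cannot supply data for the whole 3D world. As soon as the point we need is occluded (or otherwise missing from the frame), there is no color to sample and the reflection is wrong. That is why SSR gives only so-so results in an ordinary rasterization pipeline, and only reaches its complete form once ray tracing is added. Even so, it remains one of the more performance-friendly ways to get dynamic reflections, so it is still worth understanding how it is implemented.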
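To make the "walk the reflection path backwards" step concrete, here is a minimal CPU-side sketch in Python of how the reflected ray direction falls out of the view direction and the surface normal. It is not the author's shader code: the positions and the helper names (`normalize`, `reflect`) are illustrative assumptions, and `reflect` simply follows the same convention as the HLSL `reflect` intrinsic.

```python
import math

def normalize(v):
    """Scale a 3D vector to unit length."""
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)

def reflect(incident, normal):
    """Same convention as HLSL reflect(i, n): i - 2 * dot(n, i) * n."""
    d = sum(i * n for i, n in zip(incident, normal))
    return tuple(i - 2.0 * d * n for i, n in zip(incident, normal))

# Hypothetical scene: a camera one unit above a flat ground plane (ground B).
camera_pos = (0.0, 1.0, 0.0)
ground_point = (0.0, 0.0, 1.0)    # the pixel on the ground being shaded
ground_normal = (0.0, 1.0, 0.0)   # the ground faces straight up

# View direction points from the camera toward the shaded point.
view_dir = normalize(tuple(p - c for p, c in zip(ground_point, camera_pos)))

# The reflected ray leaves the ground and travels on toward object C.
reflection_dir = reflect(view_dir, ground_normal)
```

With this setup the view ray comes down at 45 degrees and the reflected ray leaves the ground going up at 45 degrees, which is the direction along which SSR then searches for object C.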

SimpleSSR.compute


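- RWTexture2D is a texture that supports random read/write access. Random write has to be enabled when the texture resource is bound in the pass.

- In SSR the work for each pixel is independent of every other pixel, so a compute shader is used to run it in parallel on the GPU and reduce the cost. The SV_DispatchThreadID input is the thread ID; if each thread handles one pixel, it is also the pixel coordinate of the current thread (positionSS).

- Sample the depth texture to get the depth value of the current pixel (depthWS), then reconstruct the pixel's world-space position positionWS from that depth. From there compute the view direction viewWS (pointing from the camera position to the current pixel), fetch the normal vector normalWS from the normal texture, and compute the reflection direction reflectionWS.

- float3 rayWS = positionWS + normalWS * _RayOffset moves the ray origin a little along the normal, so that the ray does not immediately hit the pixel it started from.

- ComputeNormalizedDeviceCoordinatesWithZ returns a value whose xy components are the projected position of the ray point (rayCS.xy) and whose z component is its depth in NDC space (rayDepth). A depth value sceneDepth is sampled from the depth texture at the same location; if the two depths differ by no more than the tolerance _Thickness, the ray counts as a hit. On a hit, sampling _CameraOpaqueTexture gives the color of the reflected pixel (raySS) that belongs to the current pixel (positionSS), and that color is written to a texture for later use.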

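- Reflection depends on the material: in general, the smoother the surface, the sharper the reflected image. The relevant data therefore has to be written to the GBuffer. With forward rendering you would have to maintain it yourself, so deferred rendering is used here directly. The A channel of GBuffer2 stores the smoothness of the current pixel, and PerceptualSmoothnessToPerceptualRoughness converts it to perceptual roughness, which is then used as a parameter to control the SSR strength.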
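The ray offset and the thickness test described above can be sketched on the CPU as follows. This is a deliberately reduced illustration in Python, not the author's compute shader: it uses a hypothetical 2D world (x across the screen, z as depth) with a one-row depth buffer instead of a real projection, and `march`, `step_size`, `max_steps`, and the buffer contents are assumptions made for the example. The smoothness helper mirrors Unity's function of the same name, which returns one minus the smoothness.

```python
import math

def perceptual_smoothness_to_perceptual_roughness(smoothness):
    # Mirrors Unity's helper of the same name: roughness = 1 - smoothness.
    return 1.0 - smoothness

def march(origin, normal, direction, depth_buffer, ray_offset, thickness,
          step_size=0.5, max_steps=32):
    """Return the index of the pixel the ray hits, or None on a miss."""
    # Push the start point along the normal so the ray does not hit
    # the surface it started from.
    start_x = origin[0] + normal[0] * ray_offset
    start_z = origin[1] + normal[1] * ray_offset
    for k in range(1, max_steps + 1):
        x = start_x + direction[0] * step_size * k
        z = start_z + direction[1] * step_size * k
        pixel = math.floor(x)
        if pixel < 0 or pixel >= len(depth_buffer):
            return None  # left the screen: there is nothing to sample
        # Hit when the ray depth and the scene depth agree within the tolerance.
        if abs(z - depth_buffer[pixel]) <= thickness:
            return pixel
    return None

# Hypothetical one-row frame: floor at depth 5, a red object at depth 3.8.
depth_buffer = [5.0, 5.0, 5.0, 5.0, 3.8, 3.8, 9.0, 9.0]
color_buffer = ["floor", "floor", "floor", "floor", "red", "red", "sky", "sky"]

origin = (0.5, 5.0)        # a point on the floor, in pixel 0
normal = (0.0, -1.0)       # the floor faces the camera (toward smaller z)
direction = (1.0, -0.25)   # reflected ray: to the right and toward the camera

hit = march(origin, normal, direction, depth_buffer,
            ray_offset=0.3, thickness=0.2)
self_hit = march(origin, normal, direction, depth_buffer,
                 ray_offset=0.0, thickness=0.2)
```

With the offset, the ray skips the floor and lands on pixel 4, so `color_buffer[hit]` is the red object. With `ray_offset=0.0` the very first step still lies within the thickness of the floor, so the ray reports a false hit on pixel 1, which is the self-intersection the offset is there to avoid.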

SimpleSSRRenderPass.cs


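- descriptor.enableRandomWrite = true; enables random read/write when the texture is created.

- FindKernel returns the index of the corresponding GPU kernel function in the compute shader; that index is then used to set the kernel's parameters.

- DispatchCompute specifies the number of thread groups and submits the kernel to the GPU for execution.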
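The thread group count passed to DispatchCompute is usually derived from the texture size and the kernel's thread group size. The arithmetic is sketched below in Python, assuming a group size of 8 by 8 threads; the actual numthreads value of the author's kernel is not shown in this post, so treat the 8 as a placeholder.

```python
def thread_group_count(pixels, threads_per_group):
    """Round up so that every pixel is covered by at least one thread."""
    return (pixels + threads_per_group - 1) // threads_per_group

THREADS_X = 8  # assumed group size, not taken from the original kernel
THREADS_Y = 8

groups_x = thread_group_count(1920, THREADS_X)
groups_y = thread_group_count(1080, THREADS_Y)

# A width that is not a multiple of the group size needs one extra group.
groups_x_odd = thread_group_count(1366, THREADS_X)
```

When the size is not an exact multiple, the last group contains threads that fall outside the texture, so a kernel dispatched this way normally returns early for out-of-range pixel coordinates.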

BlitSSR.shader


- The A channel of _SSRColorTexture stores the SSR strength, which is used as the coefficient for blending the camera image with the SSR image.
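One plausible reading of this blend is a linear interpolation driven by the alpha channel, sketched here in Python. Whether the original shader uses exactly this formula is an assumption; `blend_ssr` and `lerp` are illustrative names.

```python
def lerp(a, b, t):
    """Linear interpolation, like the HLSL lerp intrinsic."""
    return a + (b - a) * t

def blend_ssr(camera_rgb, ssr_rgba):
    """Mix the camera color with the SSR color, weighted by the SSR alpha."""
    strength = ssr_rgba[3]
    return tuple(lerp(c, s, strength) for c, s in zip(camera_rgb, ssr_rgba[:3]))

camera_rgb = (0.2, 0.2, 0.2)
no_reflection = blend_ssr(camera_rgb, (1.0, 0.0, 0.0, 0.0))    # strength 0
full_reflection = blend_ssr(camera_rgb, (1.0, 0.0, 0.0, 1.0))  # strength 1
half_reflection = blend_ssr(camera_rgb, (1.0, 0.0, 0.0, 0.5))
```

A strength of 0 leaves the camera image untouched, and a strength of 1 replaces it with the reflected color, so pixels where the ray missed can simply carry an alpha of 0.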

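Possible optimizations

- Use a mipmap pyramid to produce blur efficiently, and add some blur in regions where the reflection quality is poor.

- The ray marching test currently runs in 3D space. It can be reduced to 2D with an algorithm such as DDA, which saves some further cost.

- A Fresnel effect can be added to better balance the mix between the reflected color and the diffuse color.

- If the SSR ray misses or there is no color to sample, fall back to the skybox or to reflection probe data as a last resort.

That's a wrap~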
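Two of these ideas are small enough to sketch. The Python below uses Schlick's approximation as one common way to compute a Fresnel weight, and a trivial fallback that picks a probe color when SSR has no result. Neither is part of the current implementation; `f0 = 0.04` is a typical value for non-metallic surfaces, and `resolve_reflection` is a hypothetical helper.

```python
def schlick_fresnel(cos_theta, f0=0.04):
    """Schlick's approximation: reflectance rises toward 1 at grazing angles."""
    return f0 + (1.0 - f0) * (1.0 - cos_theta) ** 5

def resolve_reflection(ssr_color, probe_color):
    """Use the SSR color on a hit, otherwise fall back to the probe or skybox."""
    return ssr_color if ssr_color is not None else probe_color

# cos_theta is the dot product of the surface normal and the direction to the viewer.
head_on = schlick_fresnel(1.0)   # looking straight at the surface
grazing = schlick_fresnel(0.0)   # looking along the surface

hit_color = resolve_reflection((1.0, 0.0, 0.0), (0.5, 0.7, 1.0))
miss_color = resolve_reflection(None, (0.5, 0.7, 1.0))
```

Looking straight at the surface gives a weight equal to `f0`, while a grazing view pushes the weight toward 1, which matches the everyday observation that floors and water look most mirror-like at shallow angles.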


https://baifabaiquan.cn/2025/08/22/SimpleScreenSpaceReflection/